Beware of duplicate texts on the web

Duplicate product categories

A poor site structure can create duplicate pages for exactly the keywords you target in SEO. For example, take the categories Men and Women. Each of them contains a product page titled Shoes that has a different URL but the same headings, titles and products. Google may evaluate these pages as duplicates and not index them, so they will not appear in search.

This problem has a relatively simple solution. Set up automatic generation of unique titles and descriptions using the options your CMS and website offer. Make sure each category has unique content, then test the page in Google Search Console.
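As a rough illustration of automatic generation, the sketch below builds a title and a description from category data so that two categories with the same name do not end up with identical texts. The Category fields, the shop name and the wording of the templates are assumptions made for this example, not options of any particular CMS.

```python
from dataclasses import dataclass


@dataclass
class Category:
    # Hypothetical fields; a real CMS will expose its own data model.
    section: str        # e.g. "Men" or "Women"
    name: str           # e.g. "Shoes"
    product_count: int


def build_title(cat: Category, shop: str = "Example Shop") -> str:
    """Include the parent section so Men/Shoes and Women/Shoes get different titles."""
    return f"{cat.section}'s {cat.name} | {shop}"


def build_description(cat: Category) -> str:
    """Build a short description that also differs between sections."""
    return f"Browse {cat.product_count} products in {cat.section}'s {cat.name}."


men = Category("Men", "Shoes", 120)
women = Category("Women", "Shoes", 95)
print(build_title(men))
print(build_title(women))
```

Important pages still deserve manually written texts; a template like this is only a fallback for the long tail of categories.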

Duplicate titles and headings

Titles and headings describe a page and briefly characterize its content. They are important because they are the first signal for working out what is on the page and whether it is of interest to the user. Titles significantly affect search results, so they should include the main keyword that the page focuses on.

When several pages share the same title, its value may be divided between them, which significantly reduces the value each page receives. Unique headings and their correct structure are also important factors. We recommend writing titles and headings for important pages manually. For the others, we can use automatic generation.
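To find pages that share a title, it is enough to group URLs by title. The following is a minimal sketch that assumes you already have a mapping of URL to title, for example from a crawl export; the function name and the sample data are hypothetical.

```python
from collections import defaultdict


def find_duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Return each title used by more than one URL, with the URLs that share it."""
    by_title: dict[str, list[str]] = defaultdict(list)
    for url, title in pages.items():
        # Normalize case and whitespace so trivial differences still count as duplicates.
        normalized = " ".join(title.lower().split())
        by_title[normalized].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


pages = {
    "/men/shoes/": "Shoes",
    "/women/shoes/": "shoes ",
    "/about/": "About us",
}
duplicates = find_duplicate_titles(pages)
print(duplicates)
```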

PDF files and documents

PDF files and other documents stored on the server are indexed as well. For files with unique content that is not on the site itself (such as a price list), this is fine. However, documents that repeat the content of existing pages can compete with those pages, and the value for the keyword is split between them. We recommend simply blocking such files in the robots.txt file.

Example:

User-agent: *

Disallow: /*.pdf

Duplicate image descriptions

Images are often underestimated, but they are a valuable source of organic traffic, especially for e-shops with a large number of categories and products. Try to make the alt and title attributes of each image unique but also relevant.

Unlabeled mobile sites

Does your website lack a responsive layout for mobile phones, so you’ve decided to launch a separate site that’s fully optimized for these devices? Well done. However, keep in mind that its content is likely to be exactly the same as on the desktop site. For websites of this type, we recommend adding a <link rel="canonical"> tag that points to the corresponding main (desktop) page.

We add the canonical tag to the mobile page:

<link rel="canonical" href="https://bastadigital.com/" />

We add information about the mobile version to the desktop page:

<link rel="alternate" media="only screen and (max-width: 640px)" href="https://m.bastadigital.com/" />

Tip: Duplicates are also created by pages designed for printing (print pages). In this case, we recommend using print styles (CSS) and redirecting old print pages via status code 301.

Indexing of parameters, special characters and search

Filters that produce duplicate content, UTM tags and other URL parameters can get indexed and use up the website's crawl budget. Crawl budget is the amount of time search engine crawlers spend gathering information from a site's URLs. The crawlers pass the collected information on to the search engine, which turns it into the search results we see. Crawlers limit the time they spend on a website so as not to overload its hosting.

If a website has thousands of URLs, the crawler will not be able to visit all of them in the limited time available, so important pages may not be indexed. In addition, each URL with parameters is treated as a unique page, which creates duplicate content. We can address both problems by adding the <link rel="canonical"> tag.

Example of an indexed parameter:

The canonical version will look like this:

<link rel="canonical" href="https://bastadigital.com/kompletny-marketing/" />
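If the canonical tag is generated by the site itself, the canonical URL can be derived by dropping parameters that do not change the page content. The sketch below uses only the Python standard library; the list of ignored parameters and the sample URL with UTM and sort parameters are assumptions for illustration and must be adapted to the parameters your site actually uses.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change what the page shows.
IGNORED_PREFIXES = ("utm_",)
IGNORED_PARAMS = {"gclid", "fbclid", "sort", "filter"}


def canonical_url(url: str) -> str:
    """Drop ignored query parameters and any fragment, keep everything else."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS and not key.startswith(IGNORED_PREFIXES)
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    """Render the tag for the <head>, escaping the URL for use in an attribute."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


# Hypothetical parameterized URL, used only for illustration.
print(canonical_tag("https://bastadigital.com/kompletny-marketing/?utm_source=newsletter&sort=price"))
```

Parameters that do change the content, such as a page number, are deliberately kept, so only add a parameter to the ignore list when every value of it shows the same page.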

Pagination

Poor pagination is one of the most common problems of modern e-shops. The category description often appears on every page of the series, and because Google treats each page as a unique URL, this creates duplication. If we want the content of all pages to be indexed without duplication, we need to follow a certain procedure. Pagination is set in the source code inside the <head> element.

The correct setting for page 2 of the blog looks like this:

<link rel="canonical" href="https://bastadigital.com/blog/page/2/" />

<link rel="next" href="https://bastadigital.com/blog/page/3/" />

<link rel="prev" href="https://bastadigital.com/blog/page/1/" />

The canonical tag determines which is the main version of a given page. The “prev” and “next” links indicate which page comes before and which page follows. Google announced in 2019 that it no longer uses “prev” and “next” as an indexing signal, but the markup does no harm and other search engines may still read it, so keeping it is reasonable.

Leave the category description only on the main page, e.g. at https://bastadigital.com/blog/

Tip: The number of products in e-shops usually varies, so don’t forget to redirect any page number that no longer contains products to the last existing page of products. This will avoid “endless paging”.

Example: If page 10 does not exist, but 8 does, redirect with status code 302 to page 8 (temporary redirect).
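The redirect rule from this tip can be sketched as a small function. It assumes the shop knows the current product count and the page size; the function names and the (status, page) return value are hypothetical and only illustrate the decision, not a specific framework's API.

```python
import math


def last_page(product_count: int, per_page: int) -> int:
    """Number of the last page that still lists products (at least page 1)."""
    return max(1, math.ceil(product_count / per_page))


def resolve_page(requested: int, product_count: int, per_page: int) -> tuple[int, int]:
    """Return (status, page): 200 to serve the page, 302 to redirect to it."""
    last = last_page(product_count, per_page)
    if requested < 1:
        return 302, 1
    if requested > last:
        # Temporary redirect: the page may exist again once products are added.
        return 302, last
    return 200, requested


# 160 products at 20 per page give 8 pages, so a request for page 10 goes to page 8.
print(resolve_page(10, 160, 20))
```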

Language versions and hreflang

Google distinguishes the language in which a page is written and offers the results to users in a specific country or according to their preferred language. Sometimes, however, it does not recognize the correct language version, and the same content appears in search in two languages. The hreflang tag solves this by indicating the correct URL for each language version.

Example of a correctly used hreflang tag:

<link rel="alternate" hreflang="sk" href="https://www.bastadigital.com/sk/" />

<link rel="alternate" hreflang="cs" href="https://www.bastadigital.com/cz/" />

<link rel="alternate" hreflang="en" href="https://www.bastadigital.com/en/" />

The pages for the category would look like this:

<link rel="alternate" hreflang="sk" href="https://bastadigital.com/sk/kompletny-marketing/" />

<link rel="alternate" hreflang="cs" href="https://bastadigital.com/cz/kompletni-marketing/" />

<link rel="alternate" hreflang="en" href="https://bastadigital.com/en/complete-marketing/" />
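Because every language version should carry the full set of hreflang links, including the one pointing to itself, it is convenient to generate the tags from a single mapping. The following is a minimal sketch; the function name is hypothetical and the mapping reuses the category URLs from the example above.

```python
from html import escape


def hreflang_tags(versions: dict[str, str]) -> list[str]:
    """Build one <link rel="alternate"> tag per language version."""
    return [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in versions.items()
    ]


versions = {
    "sk": "https://bastadigital.com/sk/kompletny-marketing/",
    "cs": "https://bastadigital.com/cz/kompletni-marketing/",
    "en": "https://bastadigital.com/en/complete-marketing/",
}
tags = hreflang_tags(versions)
print("\n".join(tags))
```

The same list of tags is then placed in the <head> of all three pages.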

Hosting settings

A website may exist in several versions (with www, without www, with a trailing slash, without one…), which Google considers to be separate pages and expects each to have unique content. The different versions can usually be routed directly at the hosting provider, in the A records section of the control panel or via CNAME.

Http and https versions

If you have added an SSL certificate to the site, don’t forget to redirect the old http:// version of the website. URLs with http:// may still be indexed and displayed in search. You can usually redirect them in the hosting administration or with a simple rule in the .htaccess file:

RewriteEngine on

# Assumes a proxy or load balancer that sets the X-Forwarded-Proto header

RewriteCond %{HTTP:X-Forwarded-Proto} !https

RewriteRule ^.*$ https://%{SERVER_NAME}%{REQUEST_URI} [L,R=301]

Version www, without www, with slash and without slash

The choice of the preferred version is up to you, but the other version must be redirected with status code 301. Some CMSs have this redirect preset; with others you have to set it up yourself. When redirecting between the www and non-www versions, you can also use the domain’s CNAME records or add a rule in the .htaccess file:

Redirect to www version:

RewriteEngine On

RewriteCond %{HTTP_HOST} !^www\. [NC]

RewriteRule ^(.*)$ https://www.%{HTTP_HOST}/$1 [R=301,L]

Redirect to version without www:

RewriteEngine On

# Capture the host without "www." as %1; redirecting to %{HTTP_HOST} would loop

RewriteCond %{HTTP_HOST} ^www\.(.+)$ [NC]

RewriteRule ^(.*)$ https://%1/$1 [R=301,L]

Redirect to slashed version:

RewriteEngine on

RewriteCond %{REQUEST_FILENAME} !-f

RewriteRule ^(.*[^/])$ /$1/ [L,R=301]

Redirect to slash-free version:

RewriteEngine on

RewriteCond %{REQUEST_FILENAME} !-d

RewriteRule ^(.*)/$ /$1 [L,R=301]
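When checking internal links or a sitemap, it helps to compute the preferred version of a URL in code and compare it with what is actually linked. The sketch below combines the https, www and trailing-slash decisions in one function. The function name, the default choices and the "a dot in the last segment means a file" heuristic are assumptions for illustration; hosts with a port number are not handled.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url: str, use_www: bool = True, trailing_slash: bool = True) -> str:
    """Normalize a URL to https and to the chosen www and trailing-slash variant."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    host = f"www.{bare}" if use_www else bare

    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    looks_like_file = "." in last_segment  # e.g. price-list.pdf stays untouched
    if path != "/" and not looks_like_file:
        stripped = path.rstrip("/")
        path = (stripped + "/") if trailing_slash else stripped

    return urlunsplit(("https", host, path, parts.query, parts.fragment))


print(preferred_url("http://bastadigital.com/blog"))
```

Any URL for which preferred_url(url) differs from url is a candidate for a 301 redirect or a corrected internal link.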

File /index.php

In most cases, the index.php file is reachable on every subpage of your site at its own URL (for example, /blog/ and /blog/index.php), creating unwanted duplicate content. We recommend adding the <link rel="canonical"> tag or redirecting the index.php URL with status code 301. When redirecting, be careful not to create a “redirect loop”; you can avoid it by checking the requested URL before redirecting.
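The URL check that prevents a loop can be sketched as follows: redirect only when the requested URL actually ends in /index.php, and do nothing otherwise. The function name is hypothetical, and the sketch assumes it receives the URL the visitor requested, not a path that the server has already rewritten internally to index.php.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def index_redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None when no redirect is needed."""
    parts = urlsplit(url)
    if not parts.path.endswith("/index.php"):
        # Already clean: returning None here is what prevents a redirect loop.
        return None
    clean_path = parts.path[: -len("index.php")]
    return urlunsplit((parts.scheme, parts.netloc, clean_path, parts.query, parts.fragment))


target = index_redirect_target("https://example.com/blog/index.php")
print(target)
```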

The subdomain competes with the main domain

An existing blog or other content on a subdomain can in some cases dilute the strength of the domain. A blog should be on a subdomain only if its content is unique and does not fall into the same category as the main website. Google treats content on a subdomain as unique and, above all, separate from the main domain.

External duplications

Other sites copy my articles

If your text is copied and Google indexes the copy before your page, it may treat the copy as the original. The solution is to get your page indexed early, e.g. by requesting indexing in Google Search Console.

Tip: copying texts is not worthwhile

Every website should focus on creating quality content that, in addition to marketing purposes, will create added value for the reader. Anyone who has come across content creation knows that it is not easy. Although copying content from an external website is a relatively simple “strategy”, it will not work.

Quick tips for removing duplicates

The “canonical” tag

<link rel="canonical" href="https://example.com/" />

Indicates which version of the web page should appear in the index.

Use:

Parameters, paging, index.php file

The “noindex” tag

<meta name="robots" content="noindex" />

Prevents the web page from being indexed.

Use:

Internal search, PDF, documents and other files, some categories or individual pages such as a cart, backend scripts, etc.

Robots.txt file

It asks crawlers not to crawl the listed URLs, but without <meta name="robots" content="noindex" /> these URLs may still appear in search (without a description).

Use:

PDFs, documents and other files, some categories or individual pages such as a cart, backend scripts, etc.

A records, CNAME records and .htaccess

Setting up routing for the different domain versions at your hosting provider can save you time. If your hosting does not allow it, redirect the specific versions of the website with a rule in the .htaccess file.

Tools to help you detect duplicate content

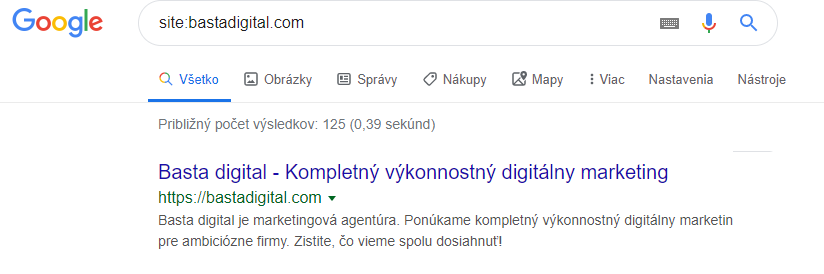

Site search operator

Search for pages included in the index using the search operator site:example.com, which will give you a basic overview of duplicate URLs.

Google Search Console

You can also see duplicates directly in the coverage section of Google Search Console. Google labels such pages “Duplicate without user-selected canonical”.

Screaming Frog

Screaming Frog is a tool designed specifically for SEO specialists. In its output you can filter duplicate content (H1, H2, meta descriptions, titles, image alt text, …) as well as URLs without canonical tags.